http://stackoverflow.com/a/10467342/2041023

Two of the best references are

1. NVIDIA Fermi Compute Architecture Whitepaper

2. GF104 Reviews

I'll try to answer each of your questions.

The programmer divides work into threads, threads into thread blocks, and thread blocks into grids. The compute work distributor allocates thread blocks to Streaming Multiprocessors (SMs). Once a thread block is distributed to an SM, the resources for the thread block are allocated (warps and shared memory) and its threads are divided into groups of 32 threads called warps. Once a warp is allocated it is called an active warp. The two warp schedulers pick two active warps per cycle and dispatch warps to execution units. For more details on execution units and instruction dispatch, see reference 1 (pp. 7-10) and reference 2.

D'. There is a mapping between laneid (a thread's index in a warp) and a core.
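To make the laneid idea concrete, here is a minimal host-side sketch of how a thread's position in its block maps to a warp and a lane. It assumes the usual CUDA linearization (x varies fastest) and a warp size of 32. The function names are hypothetical, and the sketch says nothing about which physical core a lane ends up on.

```cpp
// Warp size on Fermi, as stated in the answer.
constexpr unsigned kWarpSize = 32;

// Linear index of a thread inside its block (x varies fastest).
// dimX and dimY are the block dimensions in x and y.
constexpr unsigned linearThreadId(unsigned x, unsigned y, unsigned z,
                                  unsigned dimX, unsigned dimY) {
    return x + y * dimX + z * dimX * dimY;
}

// Which warp of the block the thread belongs to.
constexpr unsigned warpIdInBlock(unsigned tid) { return tid / kWarpSize; }

// The thread's index within its warp (the laneid).
constexpr unsigned laneId(unsigned tid) { return tid % kWarpSize; }

// Thread 40 of a 48-thread block sits in the second warp, lane 8.
static_assert(warpIdInBlock(40) == 1, "thread 40 is in warp 1");
static_assert(laneId(40) == 8, "thread 40 is lane 8");
```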

E'. If a warp contains fewer than 32 threads it will in most cases be executed the same as if it had 32 threads. Warps can have fewer than 32 active threads for several reasons: the number of threads per block is not divisible by 32, the program executes a divergent block so threads that did not take the current path are marked inactive, or a thread in the warp has exited.
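The first of those reasons (a block size that is not a multiple of 32) can be worked out ahead of time. The sketch below computes how many lanes of a given warp hold real threads and the corresponding bitmask. The helper names are hypothetical, and it deliberately ignores divergence and early exit, which only show up at run time.

```cpp
#include <cstdint>

constexpr unsigned kWarpSize = 32;

// Number of real threads in warp `warp` of a block with `threadsPerBlock` threads.
constexpr unsigned threadsInWarp(unsigned threadsPerBlock, unsigned warp) {
    return threadsPerBlock <= warp * kWarpSize
               ? 0u
               : (threadsPerBlock - warp * kWarpSize >= kWarpSize
                      ? kWarpSize
                      : threadsPerBlock - warp * kWarpSize);
}

// Bit i is set when lane i of the warp holds a real thread at launch.
constexpr std::uint32_t launchMask(unsigned threadsPerBlock, unsigned warp) {
    return threadsInWarp(threadsPerBlock, warp) == kWarpSize
               ? 0xFFFFFFFFu
               : (std::uint32_t{1} << threadsInWarp(threadsPerBlock, warp)) - 1u;
}

// A 48-thread block: one full warp, then a warp with 16 populated lanes.
static_assert(launchMask(48, 0) == 0xFFFFFFFFu, "first warp is full");
static_assert(launchMask(48, 1) == 0x0000FFFFu, "second warp is half full");
```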

F'. A thread block will be divided into WarpsPerBlock = (ThreadsPerBlock + WarpSize - 1) / WarpSize. There is no requirement for the warp schedulers to select two warps from the same thread block.

G'. An execution unit will not stall on a memory operation. If a resource is not available when an instruction is ready to be dispatched, the instruction will be dispatched again in the future when the resource is available. Warps can stall at barriers, on memory operations, texture operations, data dependencies, ... A stalled warp is ineligible to be selected by the warp scheduler. On Fermi it is useful to have at least 2 eligible warps per cycle so that the warp scheduler can issue an instruction.
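The WarpsPerBlock formula in F' is a rounded-up integer division. A small sketch, with a hypothetical function name and the warp size fixed at 32:

```cpp
constexpr unsigned kWarpSize = 32;

// WarpsPerBlock = (ThreadsPerBlock + WarpSize - 1) / WarpSize, using integer division.
constexpr unsigned warpsPerBlock(unsigned threadsPerBlock) {
    return (threadsPerBlock + kWarpSize - 1) / kWarpSize;
}

static_assert(warpsPerBlock(6) == 1, "a 6-thread block still occupies a whole warp");
static_assert(warpsPerBlock(48) == 2, "48 threads need two warps");
static_assert(warpsPerBlock(64) == 2, "64 threads fill two warps exactly");
static_assert(warpsPerBlock(65) == 3, "one extra thread costs a third warp");
```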

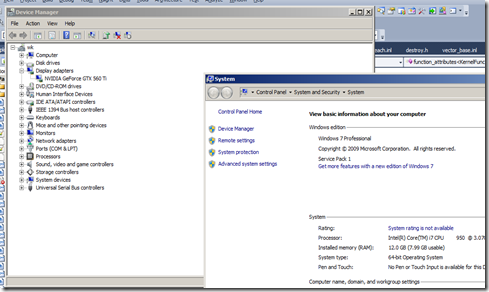

See reference 2 for differences between a GTX480 and GTX560.

If you read the reference material (a few minutes) I think you will find that your goal does not make sense. I'll try to respond to your points.

1'. If you launch kernel<<<8, 48>>> you will get 8 blocks each with 2 warps of 32 and 16 threads. There is no guarantee that these 8 blocks will be assigned to different SMs. If 2 blocks are allocated to an SM then it is possible that each warp scheduler can select a warp and execute the warp. You will only use 32 of the 48 cores.

2'. There is a big difference between 8 blocks of 48 threads and 64 blocks of 6 threads. Let's assume that your kernel has no divergence and each thread executes 10 instructions.

8 blocks with 48 threads = 16 warps * 10 instructions = 160 instructions

64 blocks with 6 threads = 64 warps * 10 instructions = 640 instructions

In order to get optimal efficiency the division of work should be in multiples of 32 threads. The hardware will not coalesce threads from different warps.
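The comparison in 2' can be reproduced with a few lines of arithmetic. This is only a sketch: the Launch struct and function names are hypothetical, and "lane efficiency" is my own label for the fraction of warp lanes that hold real threads, not a profiler metric.

```cpp
constexpr unsigned kWarpSize = 32;

// A launch configuration: kernel<<<blocks, threadsPerBlock>>>.
struct Launch {
    unsigned blocks;
    unsigned threadsPerBlock;
};

// Warps are formed per block, so partial warps are never merged across blocks.
constexpr unsigned totalWarps(Launch l) {
    return l.blocks * ((l.threadsPerBlock + kWarpSize - 1) / kWarpSize);
}

// Warp-level instructions issued, assuming no divergence.
constexpr unsigned warpInstructions(Launch l, unsigned instructionsPerThread) {
    return totalWarps(l) * instructionsPerThread;
}

// Fraction of warp lanes that hold a real thread.
constexpr double laneEfficiency(Launch l) {
    return static_cast<double>(l.blocks * l.threadsPerBlock) /
           static_cast<double>(totalWarps(l) * kWarpSize);
}

static_assert(warpInstructions(Launch{8, 48}, 10) == 160, "8 blocks of 48 threads");
static_assert(warpInstructions(Launch{64, 6}, 10) == 640, "64 blocks of 6 threads");
```

Both launches run the same 384 threads, but under this measure the first fills 75% of its lanes and the second under 19%.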

3'. A GTX560 can have 8 SM * 8 blocks = 64 blocks at a time or 8 SM * 48 warps = 384 warps if the kernel does not max out registers or shared memory. At any given time only a portion of the work will be active on SMs. Each SM has multiple execution units (more than CUDA cores). Which resources are in use at any given time is dependent on the warp schedulers and instruction mix of the application. If you don't do TEX operations then the TEX units will be idle. If you don't do a special floating point operation the SFU units will be idle.
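As a rough sketch of how those two per-SM limits interact, the code below takes the 8-blocks and 48-warps figures from 3' and computes how many blocks and warps of a given block size can be resident on one SM. It assumes registers and shared memory are not the limiting factor and that the block size is at least 1. The names are hypothetical.

```cpp
constexpr unsigned kWarpSize = 32;
constexpr unsigned kMaxBlocksPerSM = 8;   // per-SM block limit quoted above
constexpr unsigned kMaxWarpsPerSM = 48;   // per-SM warp limit quoted above

constexpr unsigned minU(unsigned a, unsigned b) { return a < b ? a : b; }

constexpr unsigned warpsPerBlock(unsigned threadsPerBlock) {
    return (threadsPerBlock + kWarpSize - 1) / kWarpSize;
}

// Blocks that fit on one SM: whichever of the two limits is hit first.
constexpr unsigned residentBlocksPerSM(unsigned threadsPerBlock) {
    return minU(kMaxBlocksPerSM, kMaxWarpsPerSM / warpsPerBlock(threadsPerBlock));
}

// Warps resident on one SM for that block size.
constexpr unsigned residentWarpsPerSM(unsigned threadsPerBlock) {
    return residentBlocksPerSM(threadsPerBlock) * warpsPerBlock(threadsPerBlock);
}

// 48-thread blocks hit the block limit first: 8 blocks, only 16 of 48 warp slots.
static_assert(residentWarpsPerSM(48) == 16, "block limit dominates");
// 256-thread blocks hit the warp limit first: 6 blocks, all 48 warp slots.
static_assert(residentWarpsPerSM(256) == 48, "warp limit dominates");
```

With small blocks the block limit is reached long before the warp limit, so most of the SM's warp slots stay empty.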

4'. Parallel Nsight and the Visual Profiler show

- executed IPC

- issued IPC

- active warps per active cycle

- eligible warps per active cycle (Nsight only)

- warp stall reasons (Nsight only)

- active threads per instruction executed

The profilers do not show the utilization percentage of any of the execution units. For GTX560 a rough estimate would be IssuedIPC / MaxIPC. For MaxIPC, assume 2 for GF100 (GTX480) and 4 for GF10x (GTX560), although 3 is a more realistic target for GF10x.
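The rough estimate is a single division. As a sketch, with hypothetical constant and function names and a made-up issued IPC of 1.5 standing in for a value read from the profiler:

```cpp
// MaxIPC assumptions taken from the estimate above.
constexpr double kMaxIpcGF100 = 2.0;        // GTX480
constexpr double kMaxIpcGF10x = 4.0;        // GTX560
constexpr double kTargetIpcGF10x = 3.0;     // more realistic ceiling for GF10x

// Rough issue utilization: IssuedIPC / MaxIPC.
constexpr double issueUtilization(double issuedIpc, double maxIpc) {
    return issuedIpc / maxIpc;
}

// An issued IPC of 1.5 (illustrative value only) on a GTX560:
static_assert(issueUtilization(1.5, kMaxIpcGF10x) == 0.375, "against the peak of 4");
static_assert(issueUtilization(1.5, kTargetIpcGF10x) == 0.5, "against the target of 3");
```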